We benchmark the RTX 5080 against the GTX 580 and GTX 980 in a variety of older PhysX-supported games

The Highlights

- NVIDIA killed 32-bit CUDA support on the 50-series, including PhysX 32-bit support

- Older cards like the GTX 580 and GTX 980 can outperform the RTX 5080 in older PhysX-enabled games like Mirror’s Edge and Mafia II

- NVIDIA has a habit of coming up with exclusive graphics tech for games and then occasionally abandoning it

Intro

A GTX 580 is up to 81% better than NVIDIA’s technologically “outdated” RTX 5080 as the GPU from 2010 is suddenly relevant again.

We even brought back a GTX 980 and pitted it against the $1,000+ RTX 5080 from 2025. The 980 is a better gaming experience than the RTX 5080 in some of the tests we’ll be running in this story.

We even dug Mirror’s Edge out of the grave for this, surviving an onslaught of EA Origin pop-ups to do so. In this game, the 5080 struggles versus older hardware.

Editor's note: This was originally published on March 12, 2025 as a video. This content has been adapted to written format for this article and is unchanged from the original publication.

Credits

Test Lead, Host, Writing

Steve Burke

Testing, Writing

Patrick Lathan

Camera, Video Editing

Vitalii Makhnovets

Video Editing

Tim Phetdara

Writing, Web Editing

Jimmy Thang

In fact, we even paired the RTX 5080 with a GTX 980 as an accelerator card to improve the performance.

And all of that is because of PhysX. NVIDIA’s “way it’s meant to be played” was once marketed as a game-changing feature, and they were right. But now, some variations of PhysX are no longer supported.

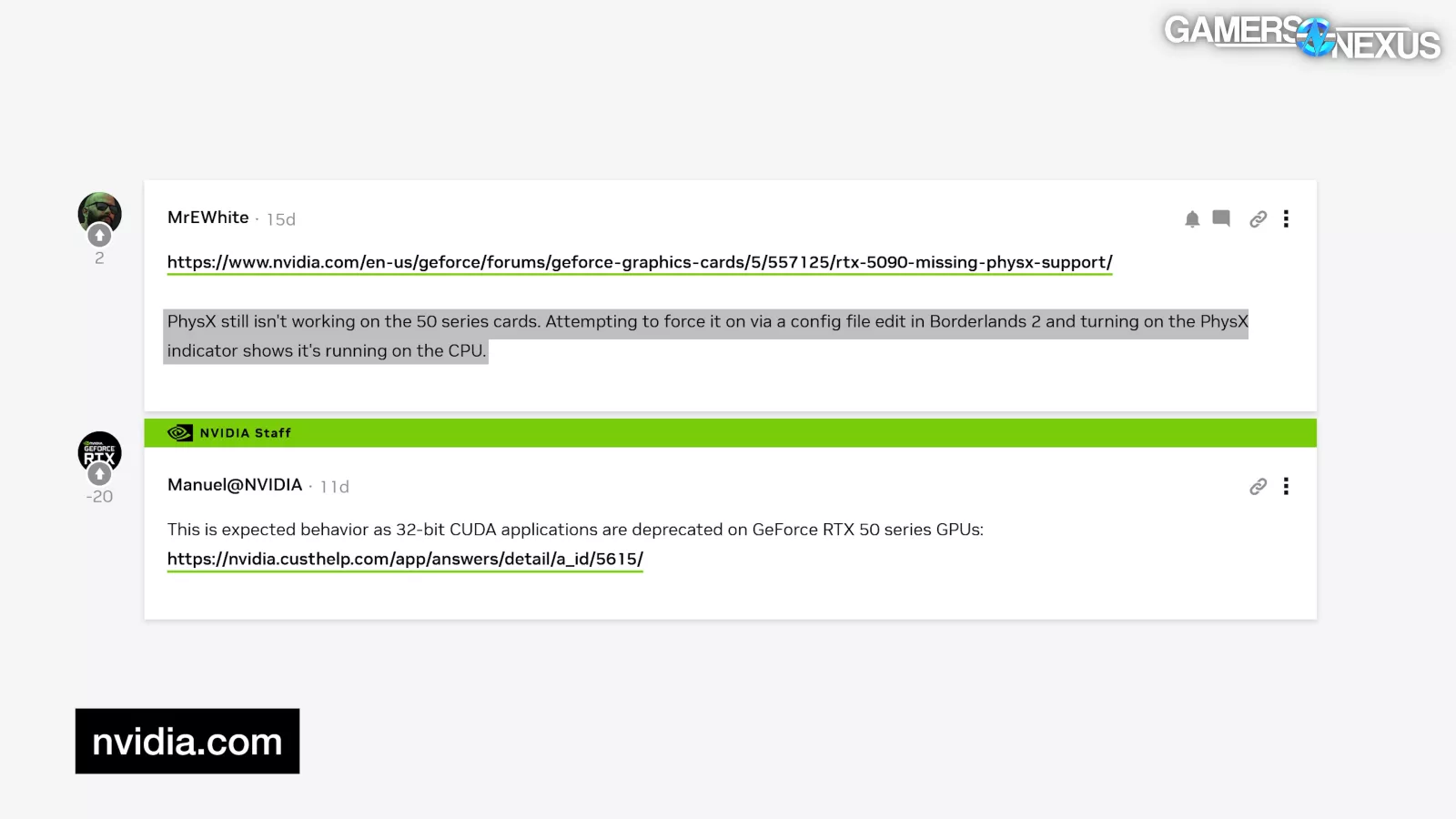

A couple of weeks ago, a user responded to an NVIDIA driver feedback thread, saying that "PhysX still isn't working on the 50 series cards. Attempting to force it on via a config file edit in Borderlands 2 and turning on the PhysX indicator shows it's running on the CPU." In response, NVIDIA stated that "This is expected behavior as 32-bit CUDA applications are deprecated on GeForce RTX 50 series GPUs," citing a post from January 17th that had (up until then) drawn little attention from the gaming community.

This is an insight into what happens when vendor-specific solutions are abandoned, and even though the affected games are ancient, it injects some healthy skepticism into topics like vendor-specific graphics improvements. Today, there are a lot of those, including DLSS and its many sub-features, Reflex, frame generation, and other technologies that could end up in an NVIDIA graveyard. Even ray tracing isn't exempt: There’s no guarantee that it will be processed the same way forever, especially with dedicated hardware for it.

Let’s get into the PhysX situation.

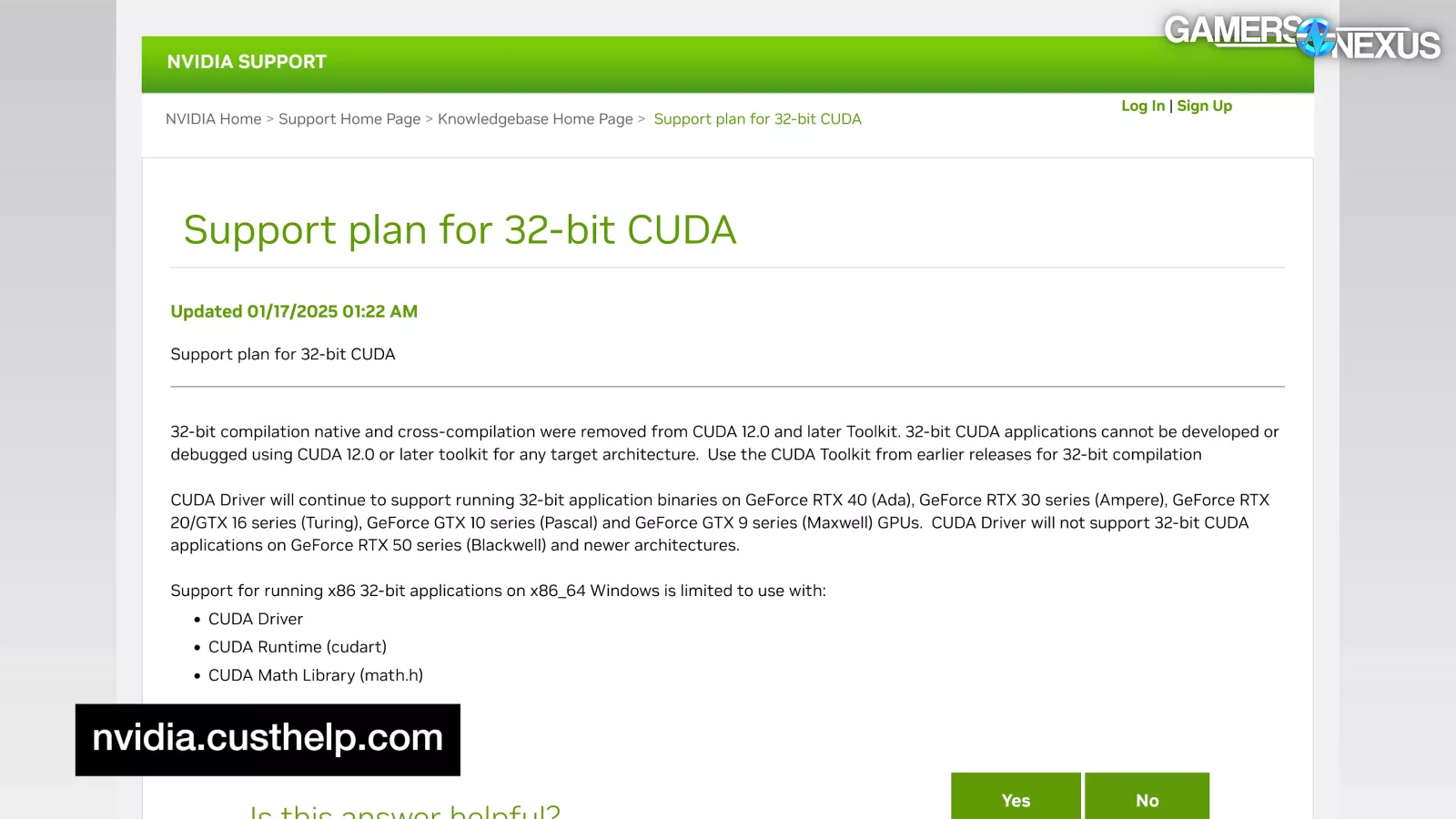

Rumors of its demise are slightly exaggerated, but not greatly: NVIDIA hasn't fully killed PhysX (not yet, anyway), but it has dropped 32-bit CUDA support, and therefore 32-bit PhysX games are affected.

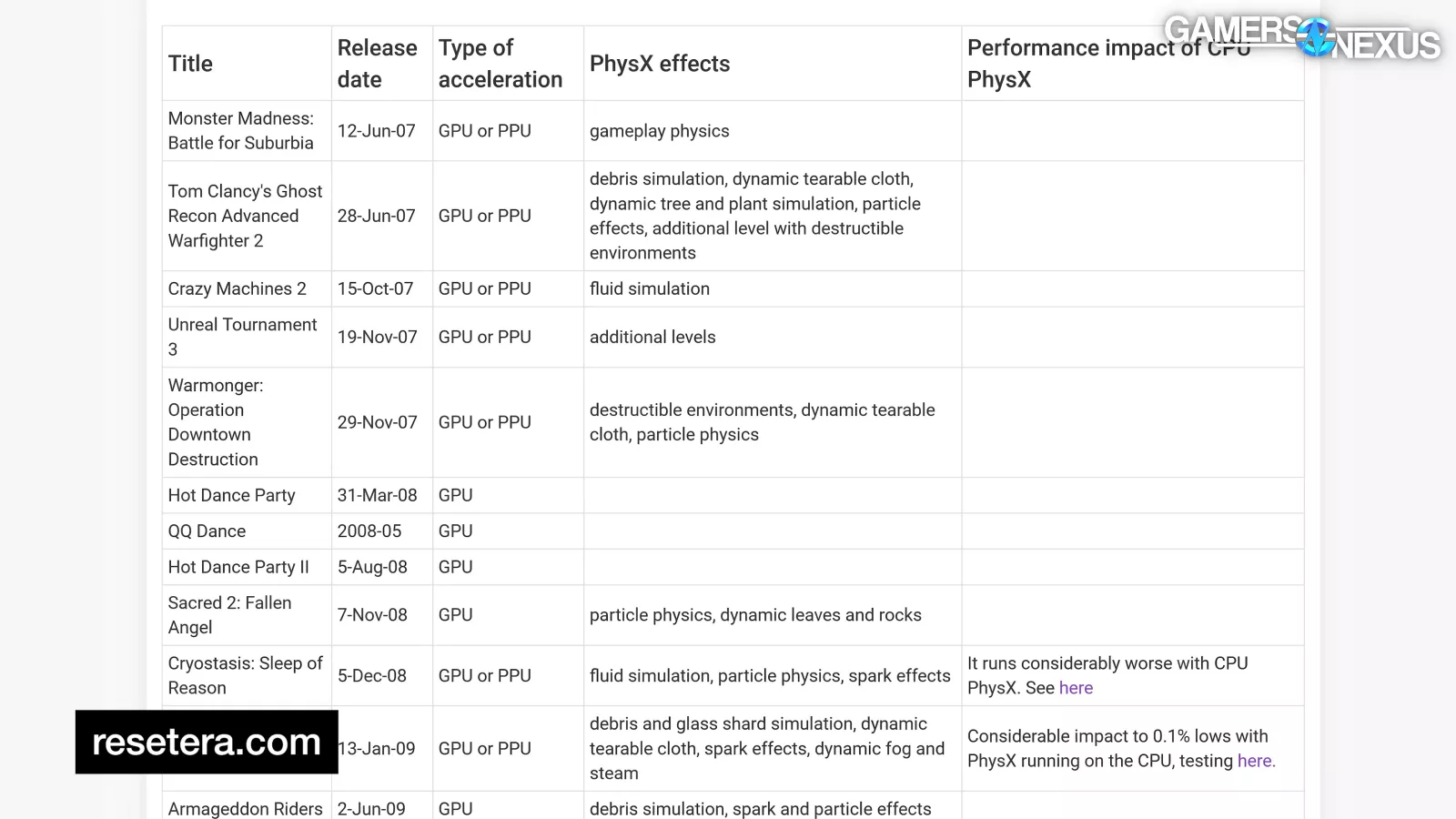

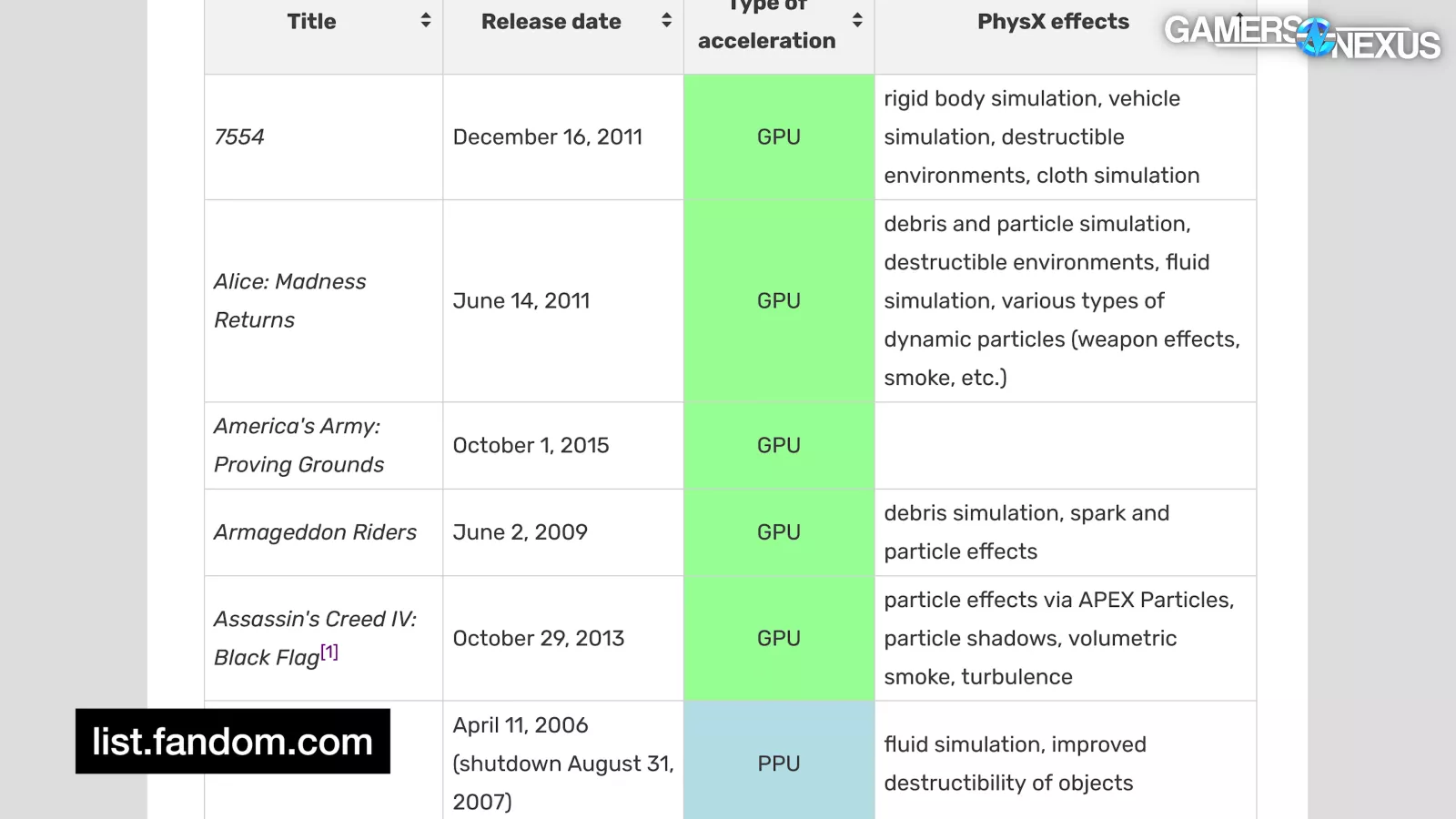

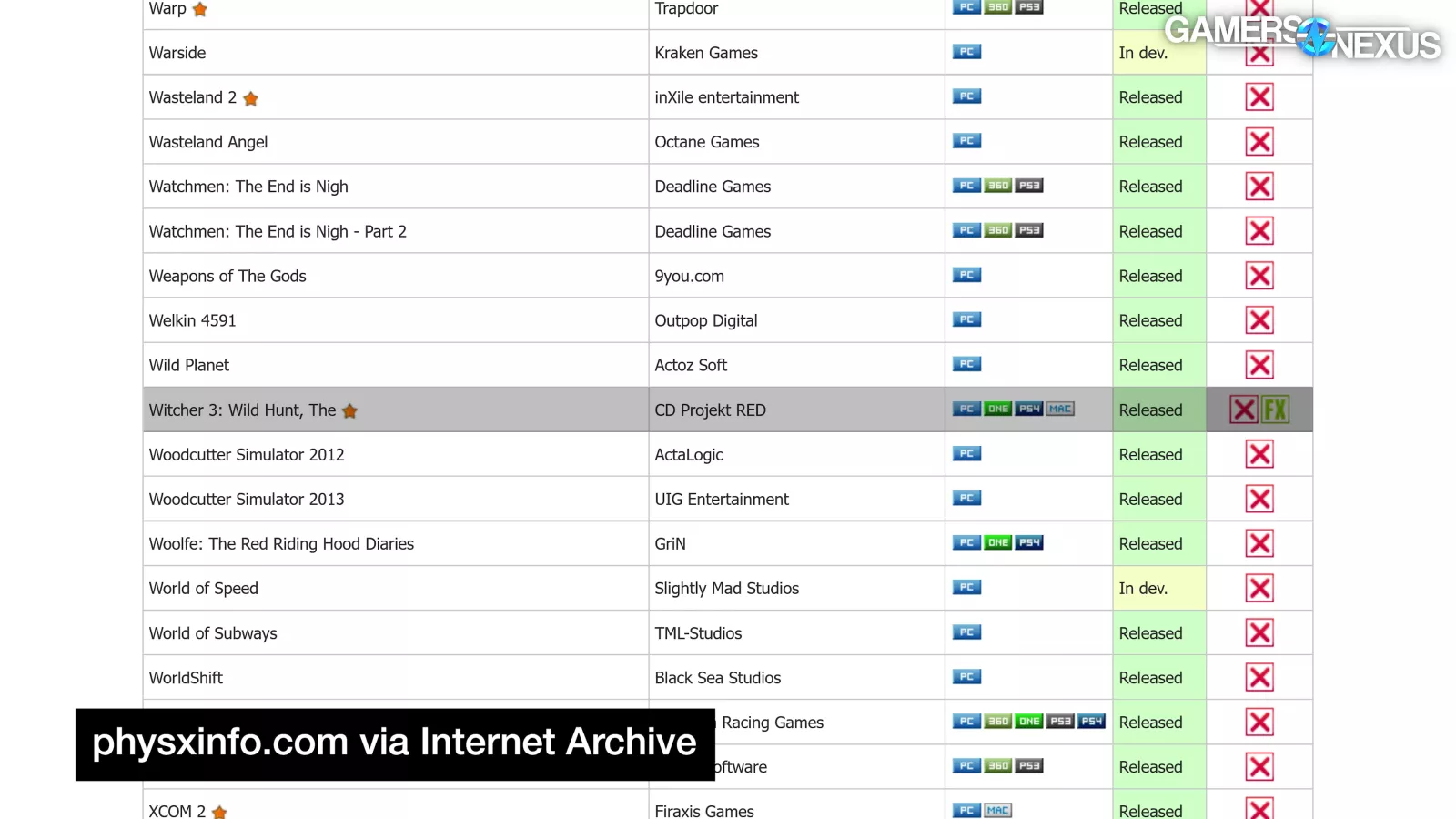

A user on the ResetEra forum has compiled a list of 32-bit games with PhysX based on a WikiLists article; big thanks to those guys for rescuing lists from rules-obsessed Wikipedia editors and their crusade to convert every list into a useless categories page. Yes, this bothers us.

PhysX has had a long, long history, and it's not actually gone. For example, newer games with 64-bit PhysX shouldn't be affected.

We're particularly confused by the February 18th Tom’s Hardware article that leads with the statement that "As far as we know, there are no 64-bit games with integrated PhysX technology," but it then mentions Metro: Exodus and The Witcher 3, both of which are 64-bit games.

Further adding to the confusion, PhysX doesn't HAVE to run on a GPU, so even removing GPU acceleration doesn't necessarily completely kill the feature in games.

PhysX Background: "The Way It's Meant To Be Played"

In order to better explain the current situation, we have to explain what PhysX was trying to be.

PhysX was part of NVIDIA’s SDK suite for developers that attempted to make it easier to integrate higher-quality graphics effects, with the downside being that those effects would run either exclusively on NVIDIA hardware or, at minimum, better on it. This is a familiar story even today. NVIDIA also drives integration by providing engineering resources to game developers. The company used to send engineers out to different game developer campuses to help them program and integrate features in their games, and while they were there, they would sometimes optimize drivers.

To paraphrase Wikipedia, PhysX was originally developed by NovodeX AG, which was acquired by Ageia in 2004, which was acquired by NVIDIA in 2008.

It originally ran on discrete PPU (or Physics Processing Unit) accelerator cards, but NVIDIA adapted the tech to run on CUDA cores. We're only concerned with NVIDIA PhysX titles here: pre-2008 games from the Ageia era already required the PhysX Legacy Installer, and we're not going far enough down the rabbit hole to test whether that still works.

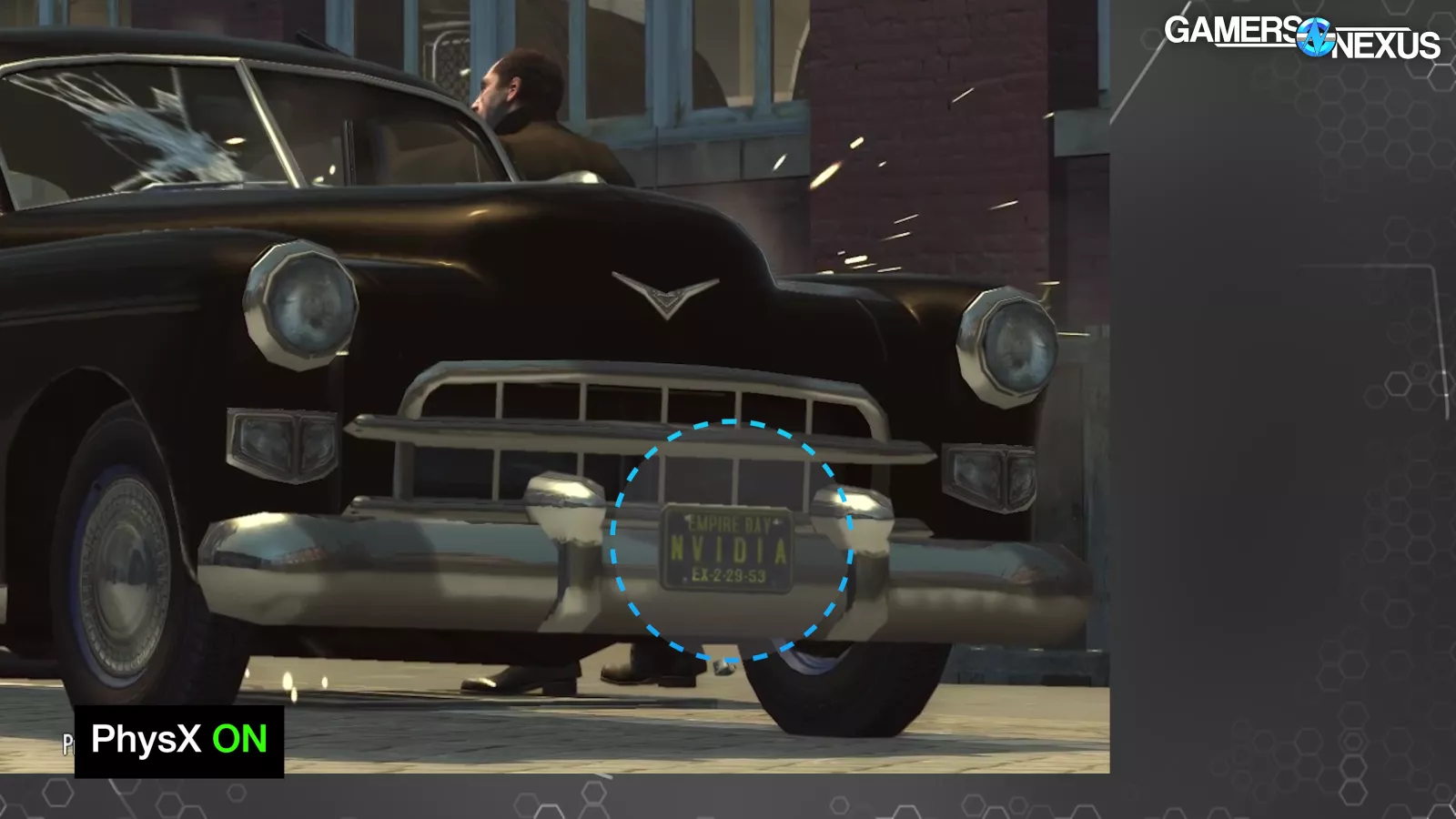

PhysX is a physics engine SDK, usually associated with destructible environments, ragdolls, fluid, fabric, interactive fog, and particles in general. NVIDIA's tagline for games it collaborated on in the heyday of PhysX was "The Way It's Meant To Be Played," and PhysX was the poster child for that philosophy. Games like Mafia II look dramatically different with PhysX enabled; the developers even put NVIDIA’s name on the cars during the benchmarking scene, turning the NVIDIA experience into literally the way it's meant to be played.

If you turn off PhysX in games like Mirror's Edge, it straight up removes objects from scenes. If you were using AMD or (GabeN forbid) Intel graphics in 2010, you were getting inferior versions of the highest-profile AAA games.

PhysX can be run on CPUs, but historically not well, which is obviously to NVIDIA's advantage.

David Kanter, who has appeared many times on our YouTube channel as a technical analyst (including for our Intel fab tour), finally gets his “I told you so” moment. It took 15 years, but he can finally say it.

As David Kanter said in 2010, "The sole purpose of PhysX is a competitive differentiator to make Nvidia’s hardware look good and sell more GPUs." He complained that "PhysX uses an exceptionally high degree of x87 code and no SSE, which is a known recipe for poor performance on any modern CPU. [...] Even the highest performance CPUs can only execute two x87 operations per cycle," meaning that performance will always remain bad, even with 2025-era CPUs.

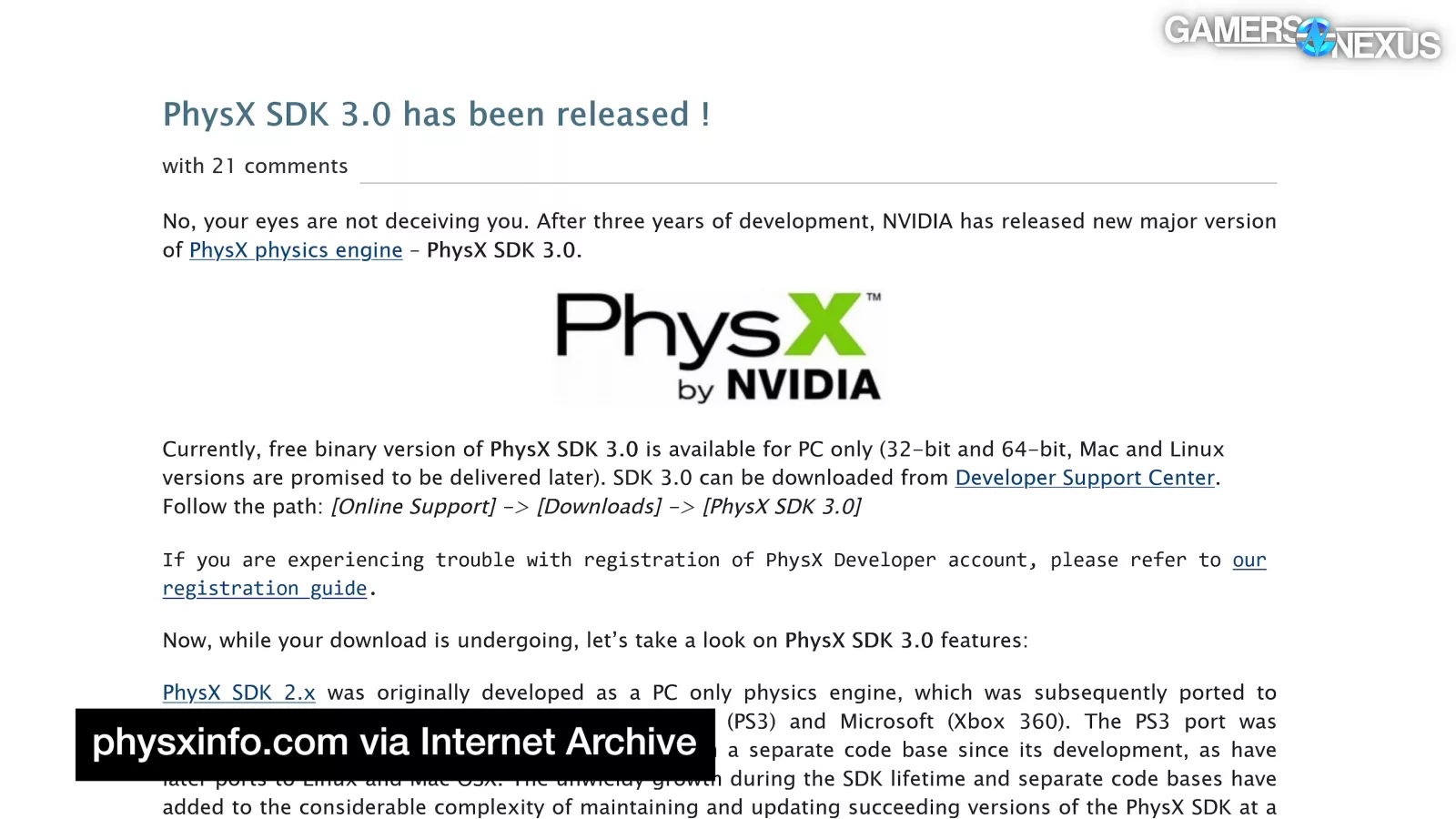

NVIDIA responded to Kanter back then by stating that PhysX 2.X "dates back to a time when multi-core CPUs were somewhat of a rarity." NVIDIA noted that it was still possible to implement multithreading and seemed to place the blame on developers, further stating that SSE and multi-threading support would be improved. PhysX 2.8.4 "with the SSE2 option to improve performances in CPU modes" was released a month after Kanter's post, so he can claim accomplishment there, and PhysX 3.0 was released in 2011 with "effective multithreading."

Our understanding is that David Kanter’s post created a firestorm within NVIDIA at the time.

All of that puts the 32-bit games from that era between a rock and a hard place, since these are the games that are now losing GPU acceleration AND run like shit even on modern CPUs.

We've seen games as late as 2014 (Borderlands: The Pre-Sequel) that appear to use PhysX 2.X. In contrast, some more modern post-2.X games like The Witcher 3 don't offer GPU acceleration at all, instead forcing PhysX to always run on the CPU.

If PhysX really did prioritize CPUs starting with 3.0, it would explain why NVIDIA suddenly lost interest.

With the release of 3.0, "GPU hardware acceleration in SDK 3.0 is only available for particles/fluids," which helps explain why The Witcher 3 with its PhysX clothing isn't GPU accelerated (although NVIDIA had originally claimed that it would be).

The last time we thought about PhysX was with NVIDIA FleX from 2014, most notable in our minds for being completely broken in Fallout 4 since the 20 series. There are still engineers toiling away within the NVIDIA Omniverse framework, though, with the most recent PhysX release at time of writing being 5.5.1 from early February.

We agree with Kanter’s analysis of the time and see many parallels to today. It’s not wholly bad to advance graphics in step with developers if you’re the hardware vendor; it’s the lock-in that becomes problematic. We sort of saw this with Intel’s first version of APO, where the feature only worked on CPUs Intel wanted to sell, but not CPUs that could truly benefit from it.

Performance Testing

We selected five 32-bit games for testing: Batman: Arkham City, Borderlands 2, Mafia II, Metro: Last Light, and Mirror's Edge. We rejected Assassin's Creed IV Black Flag because it has an FPS cap, but more importantly (as AMD owners may already know), you aren't given the option to use PhysX at all without GPU acceleration in that game. This is especially galling because the big PhysX update for Black Flag in 2014 appears to have brought the PhysX version up to 3.3.0, so there's a chance it might not run completely terribly on a CPU if that were allowed.

And that brings us to another point: Nothing in this article is news to AMD users. AMD users are used to getting shafted in these situations, but may feel vindication knowing that owners of $2,000 GPUs can’t properly play games from 15 years ago.

Judging by the admittedly not-very-scientific method of opening the PhysX .DLLs and doing a Ctrl+F search for version numbers, all of the games we’re testing appear to be using PhysX 2.X. Remember, pre-3.X PhysX games are likely to run poorly without GPU acceleration. Mirror's Edge uses 2.8.0, Mafia II and Metro: Last Light use 2.8.3, and Borderlands 2 and Batman: Arkham City use 2.8.4. These last two could therefore have better CPU performance due to the added SSE2 option, depending on the developer's implementation.
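The Ctrl+F approach can be roughly automated. The sketch below is not the tooling we used: it assumes the version is embedded in the file as plain text (ASCII or UTF-16LE) in an `x.y.z` form, and the names `find_version_strings` and `scan_dll` are hypothetical helpers made up for illustration.

```python
import re
from pathlib import Path

# Assumption: the SDK version is embedded as plain text such as "2.8.3".
# re.ASCII keeps \d limited to 0-9 so decoded binary noise cannot match.
VERSION_RE = re.compile(r"(?<![\d.])\d{1,2}\.\d{1,2}\.\d{1,2}(?![\d.])", re.ASCII)


def find_version_strings(data: bytes) -> set:
    """Return every x.y.z-looking string found in raw file bytes."""
    views = [
        data.decode("latin-1"),                         # single-byte text
        data.decode("utf-16-le", errors="ignore"),      # wide text, even offset
        data[1:].decode("utf-16-le", errors="ignore"),  # wide text, odd offset
    ]
    found = set()
    for text in views:
        found.update(VERSION_RE.findall(text))
    return found


def scan_dll(path: str) -> set:
    """Read a file from disk and scan it; the path is whichever DLL you point it at."""
    return find_version_strings(Path(path).read_bytes())


# Synthetic stand-in for a DLL: one ASCII hit and one UTF-16LE hit.
sample = b"\x00\x01PhysX 2.8.3 build\xff" + "2.8.4".encode("utf-16-le")
print(sorted(find_version_strings(sample)))
```

Like the manual search, this is a heuristic: any unrelated `x.y.z` string in the file will also show up, so the results still need a sanity check by eye.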

We used the latest compatible driver for each GPU, which was 572.47 for the 5080 and 980 and 391.35 (March 2018) for the 580.

We also used the PhysX System Software version that shipped with each of those driver packages, so 9.23.1019 for the newer cards and 9.17.0524 for the 580.

All games were run at 1080p on our 9800X3D GPU test bench, and GSYNC was universally disabled (as always). ReBAR was enabled where possible (the 580 doesn’t support it), and CSM was enabled by necessity for the GTX 580. Installing the 980 as a secondary GPU pulled 8 lanes from the primary PCIe slot, so all 5080+980 results were run in x8/x8 mode.

The NVIDIA control panel was used to manually assign PhysX processing to each device, and we confirmed that PhysX processing with the 5080 was always performed on the CPU regardless of assignment in these specific games. 64-bit PhysX games should run normally with GPU acceleration on 50 series, but that's not what we're testing. We also didn't test the 980 with CPU PhysX; it was tempting, but outside of our scope.

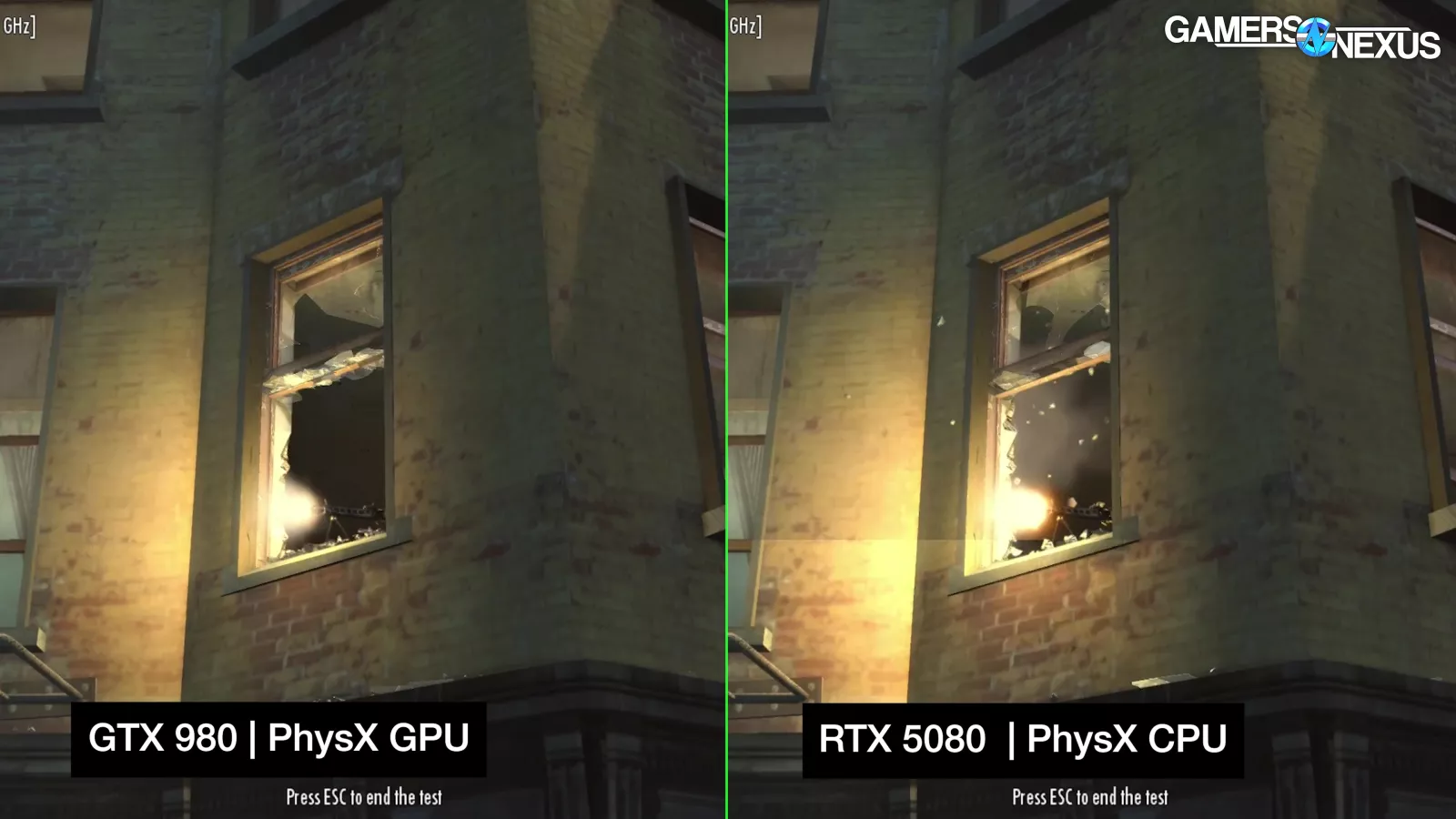

Comparing PhysX performance is a little tricky because performance depends on the scene. Mirror's Edge runs fine on 50 series cards until broken glass shows up, for example. The benchmarks we've selected here are worst-case scenarios that feature heavy PhysX, since that's what we're testing.

Mafia II 1080p Max (2010)

Mafia II is up first, mostly because the canned benchmark scene starts with the explosion of two cars plated with “NVIDIA” after they’re shot to pieces.

Mafia II has pros and cons as a test candidate. It was a flagship PhysX title with prominent effects, but on Steam the original version is now only available as a bundle with the 2020 remaster, which makes it less likely that players will revisit the original. Not everyone was happy with the remaster, though.

Mafia II really went all in with NVIDIA at the time. The game uses NVIDIA APEX, which included modular PhysX components like clothing and particles. NVIDIA noted that "when the APEX PhysX setting is at Medium or High, the game will utilize both your CPU and a suitable NVIDIA GPU" and "clothing effects will always run on the CPU unless you have a second PhysX-capable NVIDIA GPU dedicated solely to PhysX" and "APEX Clothing effects [...] are the most strenuous aspect of the game." That could mean that PhysX on the CPU has a chance here. We maxed out the settings and set APEX PhysX to High when enabled.

The APEX Clothing effects are obvious if you're looking for them, but really this benchmark is a showcase for rubble: with PhysX on, chunks of concrete and glass are blown apart and wood is scattered across the scene. There's no replacement for them with PhysX off: they just disappear. This is another game where the "real" experience requires PhysX.

Here’s the chart. It’s insane.

With PhysX on, the RTX 5080 is the worst performer in the chart. That’s right: Over $1,000 to get performance worse than the GTX 580.

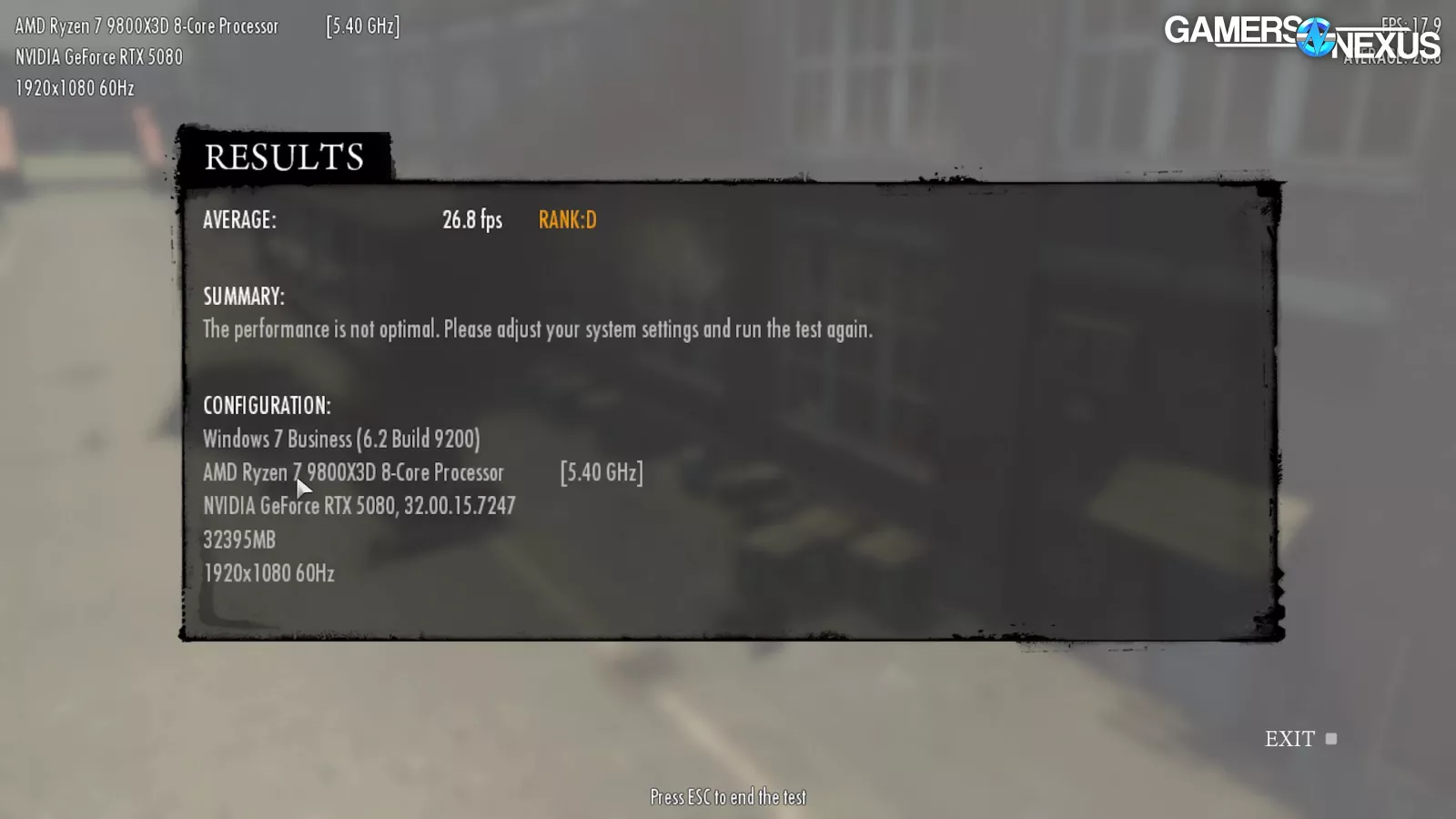

Even more insulting than the chart, Mafia II's benchmark concludes with a graded result, and the 5080 got a D. It told us, "The performance is not optimal. Please adjust your system settings and run the test again." This is coming from a game so old that it thinks Windows 11 is Windows 7. The 5080 averaged 30.3 FPS with wild variation between individual benchmark passes, showing behavior nearly identical to the GTX 580 when PhysX processing was assigned to the CPU with that card, including the variation between passes. With PhysX processing done on the GPU, the GTX 580 is 85% ahead of the 5080, with improved lows as well. Just to reiterate: This card is 15 years old and it is outperforming the RTX 5080.

On the 580, GPU PhysX is 81% ahead of CPU PhysX (at 56 to 31 FPS), and CPU PhysX clearly sucks in general. So far that lines up with our theory about 2.8.4 onwards being more CPU-favorable, since Mafia II appears to be on 2.8.3.

Turning PhysX off gives the 5080 a 1,267% uplift to 414 FPS, so it may be worth taking that route if you're stuck with a low-performing 50 series card and can’t afford to upgrade to a GTX 580 anytime soon. Of course, if you can upgrade from a 5080 to a GTX 980, there’s room for a 198% improvement.

Alternatively, traveling back to 2006 and adding in a dedicated PhysX accelerator paired with the 5080 (a GTX 980 as the accelerator, in this case) gave a respectable 441% uplift to 164 FPS average. 0.1% lows were at 60 FPS and made it the best balance of performance and visuals on this chart. NVIDIA has finally achieved what it always wanted: You now need a dedicated accelerator GPU for physics processing, it just took them 15 years to do it.
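For anyone checking the math, the uplift figures above are plain ratios against the 5080's 30.3 FPS PhysX-on average. This is a minimal sketch using the rounded averages quoted in the text; the function names are ours for illustration, and results can land a point off the chart because the chart is presumably built from unrounded data.

```python
def percent_uplift(baseline_fps: float, new_fps: float) -> float:
    """Percent improvement of new_fps over baseline_fps."""
    return (new_fps / baseline_fps - 1.0) * 100.0


def fps_from_uplift(baseline_fps: float, uplift_percent: float) -> float:
    """Invert the calculation: the FPS implied by a quoted uplift."""
    return baseline_fps * (1.0 + uplift_percent / 100.0)


BASELINE_5080_PHYSX_ON = 30.3  # average FPS quoted above

for label, fps in [("5080, PhysX off", 414.0), ("5080 + 980", 164.0)]:
    uplift = percent_uplift(BASELINE_5080_PHYSX_ON, fps)
    print(f"{label}: {uplift:.1f}% uplift")

# The 980's average is not quoted directly; this is only what 198% implies.
implied_980 = fps_from_uplift(BASELINE_5080_PHYSX_ON, 198.0)
print(f"Implied GTX 980 average: {implied_980:.1f} FPS")
```

With the rounded inputs, the PhysX-off case works out to roughly 1,266% rather than the charted 1,267%, which is the kind of gap rounding produces.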

Metro: Last Light 1080p VHH (2013)

2013’s Metro: Last Light is technically the most recent release on this list, although it's still a 32-bit application—or, as NVIDIA spun it back in 2013, "thanks to a highly efficient streaming system Last Light’s world uses less than 4GB of memory."

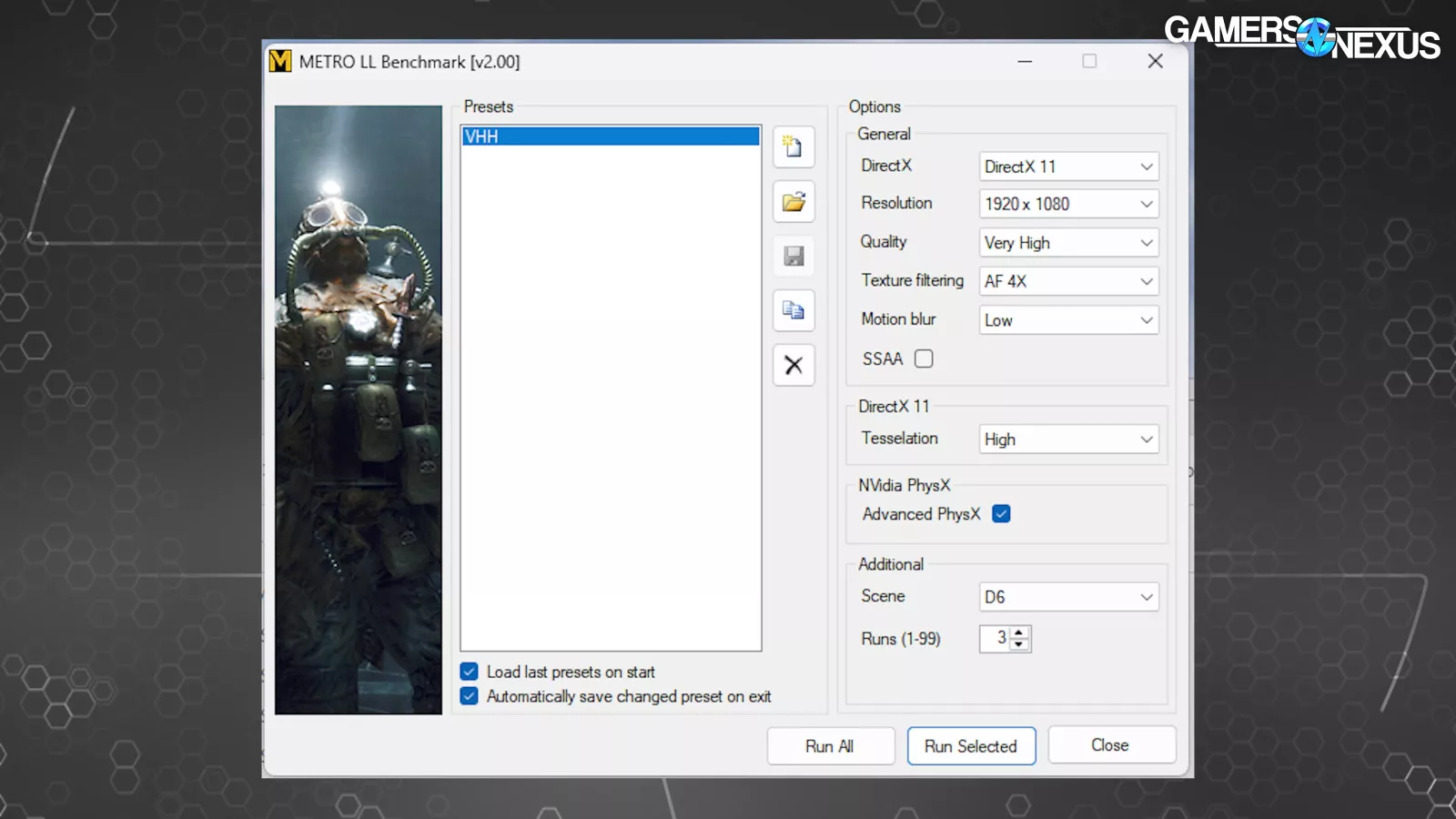

"Advanced PhysX" is an on-or-off toggle for all PhysX effects, which NVIDIA categorized as Debris & Destruction, Explosive Enhancements, Fog Volumes, and Cloth.

We've spent many, many hours benchmarking Metro: Last Light, so for old times' sake we used our old VHH graphics preset: "Very High" quality, AF 4X texture filtering, low motion blur, no SSAA, and high tessellation. This was one of the benchmarks that built GN in the early days.

Last Light includes an unusually user-friendly standalone benchmark that lasts three minutes without loading screens, which is why we used it so frequently back in the day. Initially, there's not a dramatic difference with PhysX on versus off until about two minutes in, when explosions and gunfire start to blast chunks of concrete across the scene. Visually, the developers did a fairly good job of making up for the lack of PhysX when the option is disabled (at least in this scene). PhysX helps, but disabling it won't ruin the game. On a GTX 980, it looks great. It’s also not bad on a GTX 580. The 5080 is a different story. It’s bad.

In all tests with the 5080 (including the 5080+980 combined test), the animation is bugged out for the train at the beginning of the scene as it comes to a stop.

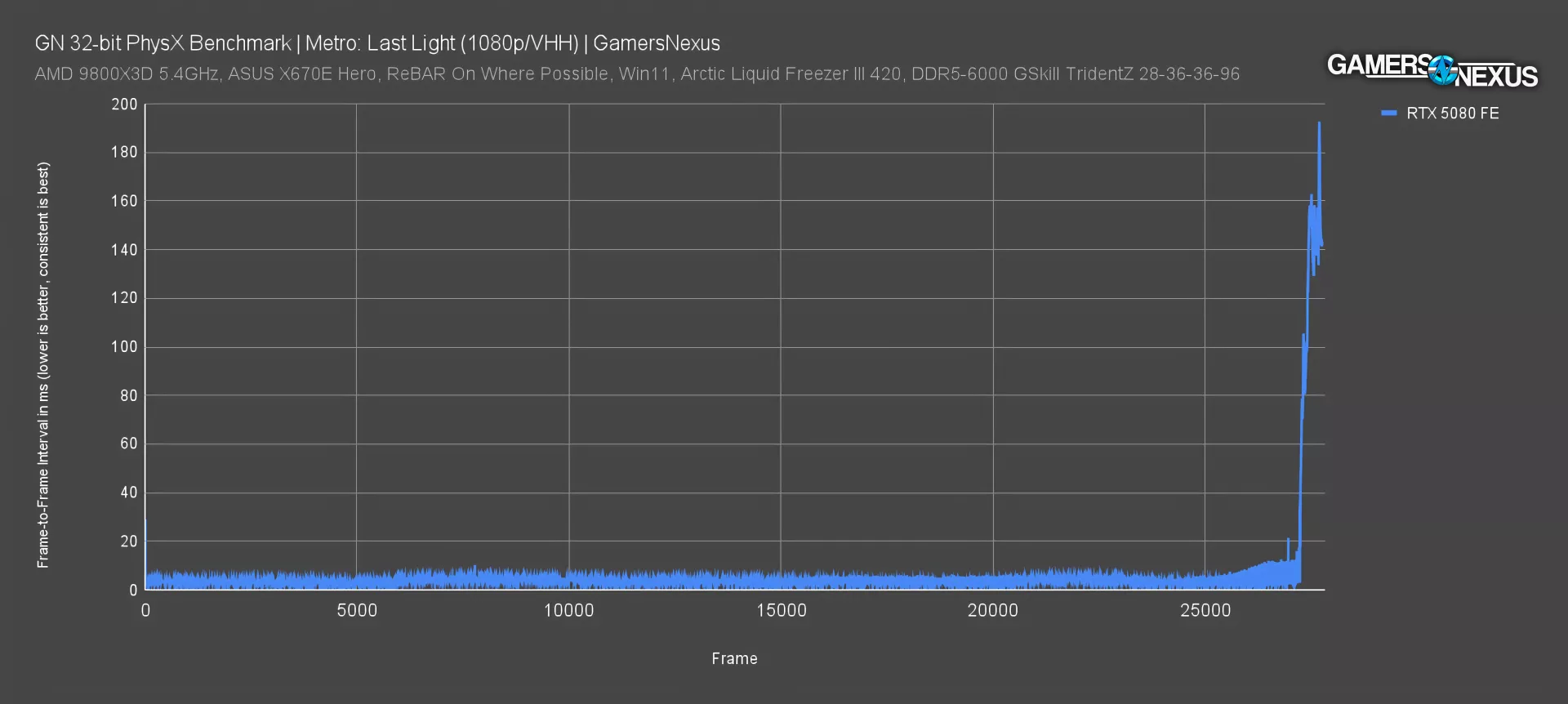

As we can see on this frametime plot, things are overall OK until the end. Performance completely tanks on the 5080 and 9800X3D as soon as the PhysX effects kick in, which ruins the experience and makes the game unplayable. If you watch captured footage of the benchmark, you can see that the sharp spike at the end of this plot makes up a full third of the benchmark chronologically, with framerates dropping down below 10 FPS for the last minute straight. It doesn’t look that way on the chart because of how the frametime chart is plotted, but this is a significant portion of the test time.

Note that we're logging the entire benchmark run here, so these bar chart results aren't comparable to our ancient GPU charts. The early non-PhysX part of the bench helped the 5080 to score relatively high in terms of average framerate, but the exceptionally poor 1% and 0.1% lows are due to the performance completely falling apart as soon as explosions and gunfire start up. It is totally unplayable, which is unfortunate, because NVIDIA said PhysX is the way it’s meant to be played.
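To see how a run can post a high average while the lows collapse, here is a minimal sketch with made-up frametimes. It is not our capture data, and it assumes one common definition of "1% low" (the average framerate across the slowest 1% of frames), which may differ from how any given tool calculates it.

```python
def average_fps(frametimes_ms):
    """Total frames divided by total time, in frames per second."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)


def low_fps(frametimes_ms, fraction):
    """Average FPS over the slowest `fraction` of frames (assumed definition)."""
    worst_first = sorted(frametimes_ms, reverse=True)
    count = max(1, int(len(worst_first) * fraction))
    slowest = worst_first[:count]
    return 1000.0 * len(slowest) / sum(slowest)


# Hypothetical three-minute run: two minutes at 2.5 ms per frame (400 FPS),
# then one minute at 125 ms per frame (8 FPS) once the heavy effects start.
run = [2.5] * 48_000 + [125.0] * 480

slow_frame_share = 480 / len(run)         # share of frames that are slow
slow_time_share = (480 * 125.0) / sum(run)  # share of wall-clock time spent in them

print(f"Average: {average_fps(run):.1f} FPS")
print(f"1% low: {low_fps(run, 0.01):.1f} FPS")
print(f"0.1% low: {low_fps(run, 0.001):.1f} FPS")
print(f"Slow frames: {slow_frame_share:.1%} of frames, {slow_time_share:.1%} of time")
```

In this synthetic case the slow section is about 1% of the frames but a third of the runtime, which is why a per-frame view can understate how long the bad stretch lasts.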

Even the GTX 580 (with GPU acceleration) coped with this section of the bench better, as indicated by its superior 22.3 FPS 1% and 19.2 FPS 0.1% lows. Watching the benchmark in action makes it clear that the 5080 completely failed this test.

The best playable framerate scored with PhysX was with the 5080 and 980 working together, paying homage to Ageia as it was meant to be. The two averaged 252 FPS with much more reasonable lows. Disabling PhysX on the 5080 vastly improved performance as usual, but that's not a reasonable option in this game. Although this one copes better than Mafia II, it does still totally change the experience. AMD players have lived with this reality for over a decade, but now NVIDIA’s own customers -- for those who play older games -- get to experience vendor lock-out.

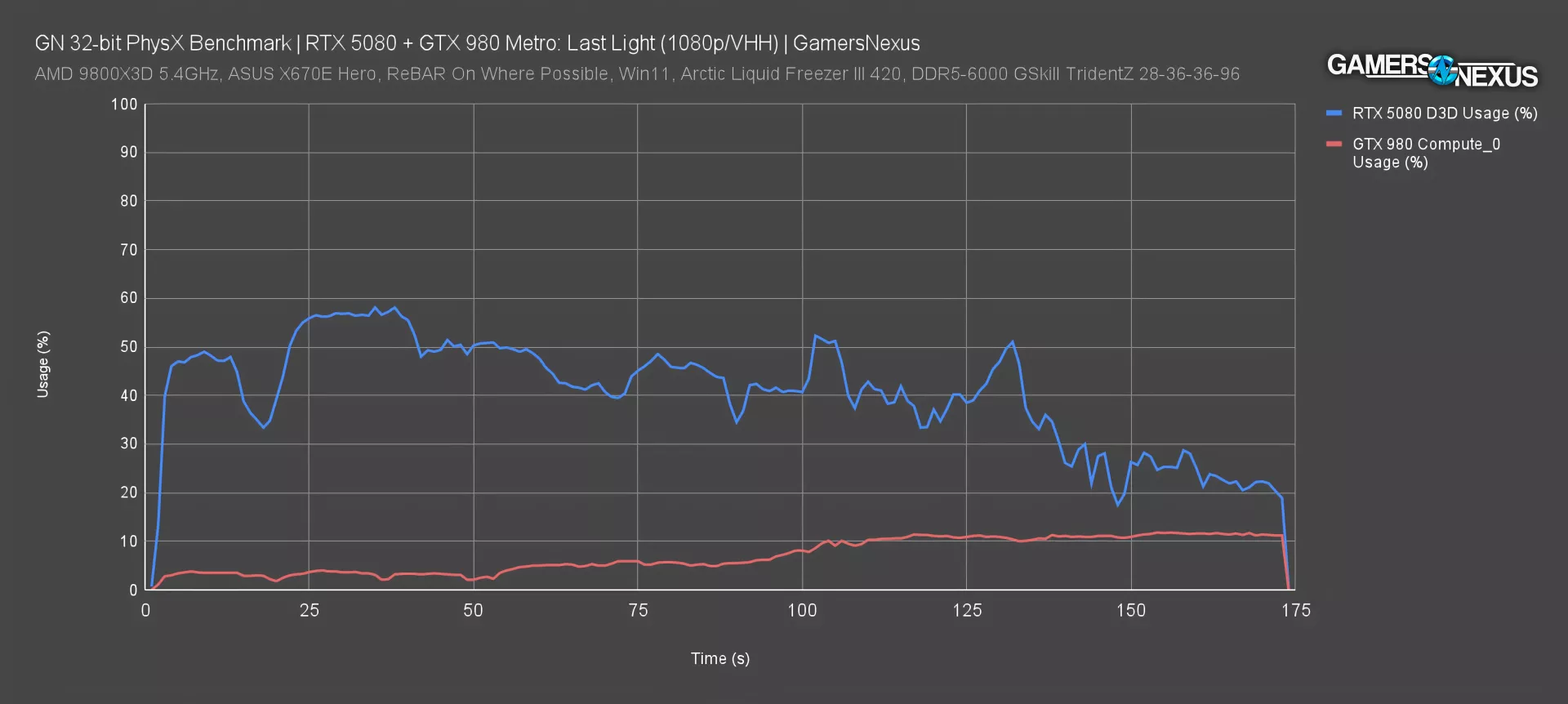

Out of curiosity, we also ran a HWiNFO log during a pass of the Last Light benchmark with the paired 5080 and 980. For the 5080, core load and HWiNFO's "D3D Usage" metric had a near 1:1 correlation, and for the 980, core load and the "Compute_0 Usage" metric had a similar correlation. Plotting those two against each other reveals the point at which the PhysX effects kick in, with the 5080's usage dropping over the course of the benchmark as the workload bottlenecks elsewhere, while the 980's usage rises at the same time. The 5080's bus load was maxed out during the whole bench run, which makes some sense given the reduction of PCIe lanes.
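A "near 1:1 correlation" between two logged metrics can be checked numerically rather than by eye. The sketch below computes a Pearson coefficient over two CSV columns. The column names and values are invented for illustration, and a real HWiNFO export uses different headers and needs more careful parsing.

```python
import csv
import io
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def column(csv_text, name):
    """Pull one numeric column out of CSV text by header name."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [float(row[name]) for row in reader]


# Hypothetical log excerpt; headers and numbers are placeholders, not real data.
SAMPLE_LOG = """core_load,d3d_usage
97,96
95,95
80,81
62,60
45,46
"""

r = pearson(column(SAMPLE_LOG, "core_load"), column(SAMPLE_LOG, "d3d_usage"))
print(f"Pearson r = {r:.3f}")
```

A value close to 1.0 means the two metrics rise and fall together, which is the sense in which one can stand in for the other on a plot.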

Mirror's Edge 1080p Max (2008)

Mirror's Edge is the oldest game on our list. There aren't many graphics options, and PhysX is a simple on-or-off toggle. But the graphics load in Mirror’s Edge was absolutely brutal for its time, with glass breaking in particular causing major problems for hardware that couldn’t handle it. Natively, the game is capped at 62 FPS. We were forced to edit the config file to uncap the framerate for testing, which would reportedly lead to a softlock later in the game. This is another game where "just turn PhysX off" is a potential argument, even though we don't agree with it. We'd argue that the PhysX version of the game is superior, with lots of broken glass, fog, and fabric that's frequently completely missing with the setting off, but the PC version with added PhysX was only released a couple months after the original console launch.

Here’s the chart.

The built-in Mirror's Edge flyby bench was inadequate, so we selected a simple bench path that includes breaking glass, which seems to be the major trigger for poor performance in this title. The 580 with GPU-accelerated PhysX outperformed the RTX 5080, which was stuck with CPU PhysX. Although the average framerate was roughly tied, the lows on the 5080 FE indicate huge problems with frametime pacing that ruin the experience. Just like Mafia II, the run-to-run variance was extremely high with CPU PhysX on both the 5080 and 580.

The solo GTX 980 beat the 5080 by 36%, which isn’t the way those numbers are supposed to go. The 5080 + 980 pairing beat the solo 5080 by 276% with significantly improved lows, and the experience is a lot better. The retired press from the PhysX era can all finally say, “I told you so.” Of the charted PhysX-enabled results, the paired cards scored the best. The 5080 with PhysX disabled showed a 475% uplift, but that's at the cost of removing PhysX items from the scenery, and even with PhysX off, the 5080's lows were weak.

As with Mafia II, GPU-accelerated PhysX performed significantly better on the 580 than CPU PhysX, another possible indication of kneecapped CPU performance in the older PhysX versions.

Borderlands 2 1080p Max (2012)

Borderlands 2 is up next.

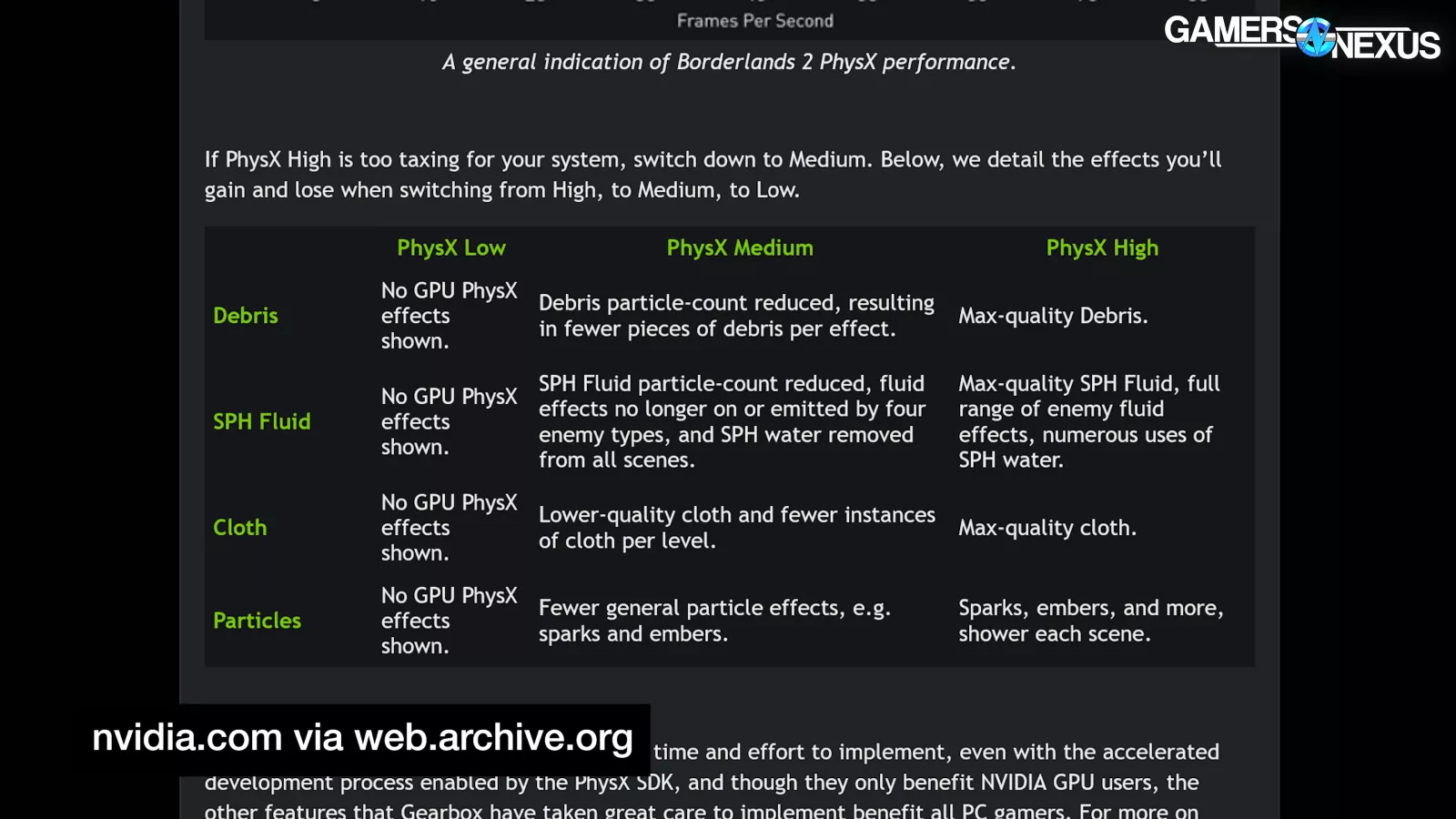

We've confirmed that the "Low" setting for PhysX in Borderlands 2 turns off all visible effects, so we're labeling that setting as PhysX Off on the chart. PhysX effects include debris, cloth, particles, and Smoothed-Particle Hydrodynamics (SPH) fluid. We maxed out the settings and set PhysX to High when enabled. Borderlands 2 appears to grey out the PhysX option when no compatible card is detected, but this doesn't actually affect the setting, it just stops you from adjusting it without manually editing the config file. Great job, developers.

Borderlands 2 contains a built-in PhysX test scene that's accessible with some light modding. This offers a good overview of how the game copes with a lack of PhysX: some liquid and cloth is replaced with flat textures, some completely disappears, and sparks and rubble are completely eliminated. As with the other titles we've tested, "just turn PhysX off" isn't acceptable advice: it's a huge part of the game's visuals and it’s what NVIDIA and this game used in their marketing. AMD users of the era can, once again, finally feel heard and vindicated.

Here’s the chart.

The 5080 avoided landing at the absolute bottom of the chart here, but this is a more CPU-friendly PhysX 2.8.4 title, as we can see from the fact that CPU PhysX on the 580 scores higher than GPU acceleration on the same card. Still, the 980 with GPU acceleration outperformed the 5080 by 7% -- and we even used a 5080 with all of its ROPs. The 5080 plus 980 combination failed to launch at all in this title and isn't included on this chart.

Turning PhysX off gained 447% performance for the 5080 with an average of 518 FPS, but again, that's not an option anyone should recommend. You should be able to play a game from 13 years ago on a 5080 without disabling core features.

Batman: Arkham City 1080p Max (2011)

Batman is up next.

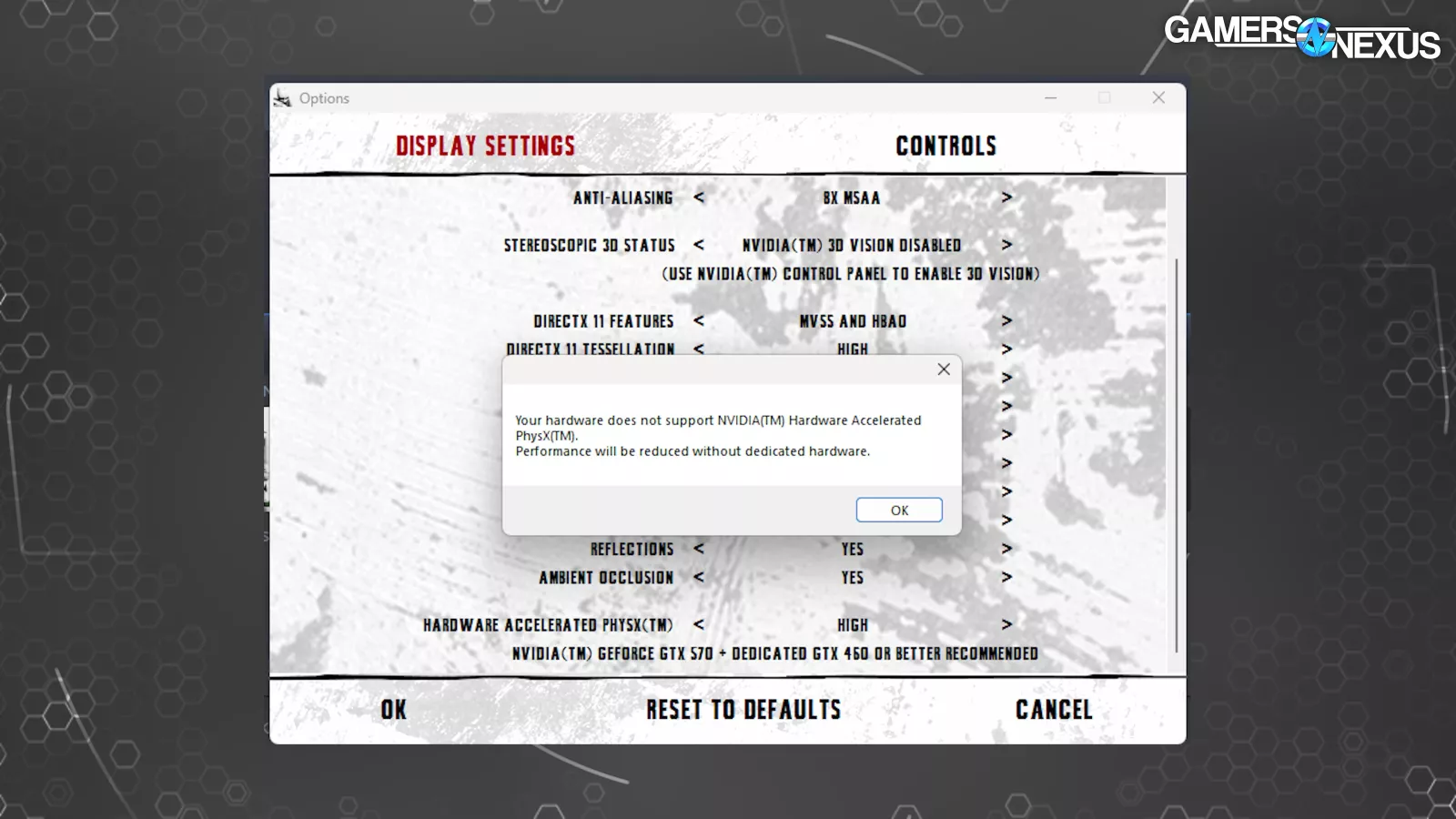

Other Arkham games would have worked, but we picked Arkham City since it's a little newer than Asylum. In Arkham City, PhysX handles newspapers, flags, ropes, fog, sparks, glass, and general destruction. We maxed out the settings with PhysX set to High when enabled. When we tried this with our 5080, the game noted that our performance would be reduced; it suggested installing a nice GTX 570 instead with a GTX 460 as a dedicated PhysX card, which it accurately says would be an upgrade from our 5080. And you could do just that: You could go buy a used, older card if you’re an enthusiast of these games but want 50-series hardware.

The game has a built-in benchmark that features heavy use of PhysX in several scenes, and we logged performance for the entire duration across all of them. Unfortunately, the scenes are relatively short and have black loading screens between, which can artificially inflate framerates. Examining our frametime plots showed us that the black screens caused frametime spikes rather than dips, but these were relatively short. For a more serious review -- not that a benchmark using a GTX 980 and RTX 5080 in the same system to test a 14-year-old game isn’t serious -- we'd never log across loading screens like this.

The benchmark makes it very clear that PhysX is necessary to see the game as it was intended to be seen, especially the segment where display cases break and shower the floor with glass that's completely absent without PhysX.

There was serious stuttering on all GPUs other than the GTX 580. That could either be because the framerates are far above what the developers planned for, or because the 580 used a different driver than the rest.

The 5080 is off to a promising start here at 152 FPS average, giving it a 73% advantage over the GTX 980's GPU accelerated result. However, we can also see that going from PhysX on to PhysX off with the 5080 resulted in a 248% performance boost up to 529 FPS, while the same change on the 980 "only" increased performance by 59% up to 140 FPS. That's because the 980 is processing PhysX onboard, while the 5080 necessitates running it on our 9800X3D instead, which is a huge bottleneck.

The 580 results are also interesting, with the PhysX CPU result actually 12% ahead of the PhysX GPU result. As we mentioned, this is a PhysX 2.8.4 title that may make use of the more CPU-friendly SSE2 instruction set, so it makes some sense that the CPU performance isn't completely abysmal.

The Arkham City graphics menu recommends running a secondary card as a dedicated PhysX processor, which is exactly what we did with our 5080 + 980 test. Ignoring the stuttering issues, this was the best result on the chart, allowing us to enable PhysX effects while averaging 215 FPS. It shouldn’t be a surprise that, of cards dating from 2025 to 2010, the 2025 card is the best -- but it is, and only when paired with the 2014 card. That's a 41% uplift gained by adding in an 11 year old graphics card. In terms of frametime consistency, the winner is the 580.

Conclusion

It's not the biggest deal in the world that a list of games from 12-15 years ago, several of which have been remade or otherwise re-released, are now more difficult to run on PC. That’s not the concern here.

The broader issue is that NVIDIA has a habit of trying to come up with exclusive graphics tech for games. The exclusive part is key. Examples include RTX-specific ray tracing features, DLSS, MFG, 3D Vision, Ansel, TXAA, WaveWorks, MFAA, DLAA, HBAO+, Reflex, and HairWorks.

You get the point. This drags the rest of the industry along in its wake, and then when they abandon the tech down the line, the customers are left holding the bag. That's NVIDIA's prerogative as the eternal market leader, but it doesn't make it feel any better for us suckers with 3D Vision monitors and glasses in our attics. And we actually liked 3D Vision.

But it’s interesting: NVIDIA is making decisions on graphics, which isn’t all that controversial. It’s the implementation that’s problematic. It isn’t necessarily an anti-trust issue to decide to use its power and development resources to force improvements to graphics or to make game development easier in a way that benefits the technology provider that’s in that position; however, it can become one depending on how NVIDIA decides to execute on that vision, and that needs to be carefully monitored if it maintains its 90/10 split in the market.

The bigger problem today is just that this move came as an unexpected (to us) side effect of the removal of 32-bit CUDA support in new cards, which appears to have blindsided everyone and could have other downstream effects beyond PhysX. For example, PassMark recently noted that 5090 and 5080 compute performance was unexpectedly low in benchmarks due to OpenCL 32-bit support breaking without warning.

This is also an issue for game preservation in ways that feel related to Stop Killing Games, but for different reasons.

It's very likely that some of these games could be tweaked to run better with CPU PhysX by swapping .DLLs or otherwise modding them, but we can't take it for granted that some genius on a forum will always be there to clean things up, and that's not the point. You could spend thousands of dollars on a brand-new NVIDIA GPU, and in the best-case scenario you'll still have to do extra work to get these games playable with NVIDIA's own abandoned feature.

We don't have a decisive call to action here. In the short term, we think NVIDIA could probably fix PhysX; there are probably plenty of reasons that it would be difficult, but we're willing to bet that with a $3 trillion market cap, they can figure out a way to get Borderlands 2 running at a stable framerate on a 50-series GPU.

In the long term, this feels like a grim omen for other NVIDIA-specific features that are integrated into games (especially if it’s core to how that game feels), or even newer PhysX games—we seriously doubt that Fallout 4 is the only half-assed broken implementation of 64-bit PhysX.

Fifteen years from now, who knows whether features like Reflex and MFG and DLSS and even ray tracing (as it is done today) will be compatible with the hardware of that day. Ray tracing in particular seems like it’ll go through a paradigm shift at some point just because it’s so new.